Click Play to watch the presentation.

The Challenge

Agent confidence comes from practice. But traditional roleplays take time — and a lot of it.

At Chewy, new customer care agents typically practice mock calls with one another during training.

While this helps them get familiar with call flow, the experience often feels inconsistent because both participants are still learning. Feedback is limited, repetition is scarce, and trainers struggle to keep pace as class sizes grow.

The result: agents enter production less confident, and trainers spend valuable time facilitating roleplays instead of providing personalized coaching.

The challenge was clear: how could we give every agent realistic, feedback-driven call practice that builds empathy and confidence, without adding more trainer time or cost?

The Solution

To answer that question, I designed and led the creation of the Chewy Customer Simulator, an AI-powered learning experience that lets agents practice authentic customer calls, receive instant coaching feedback, and build confidence through repetition — all within the learning platform they already use.

The simulator evolved through two major versions:

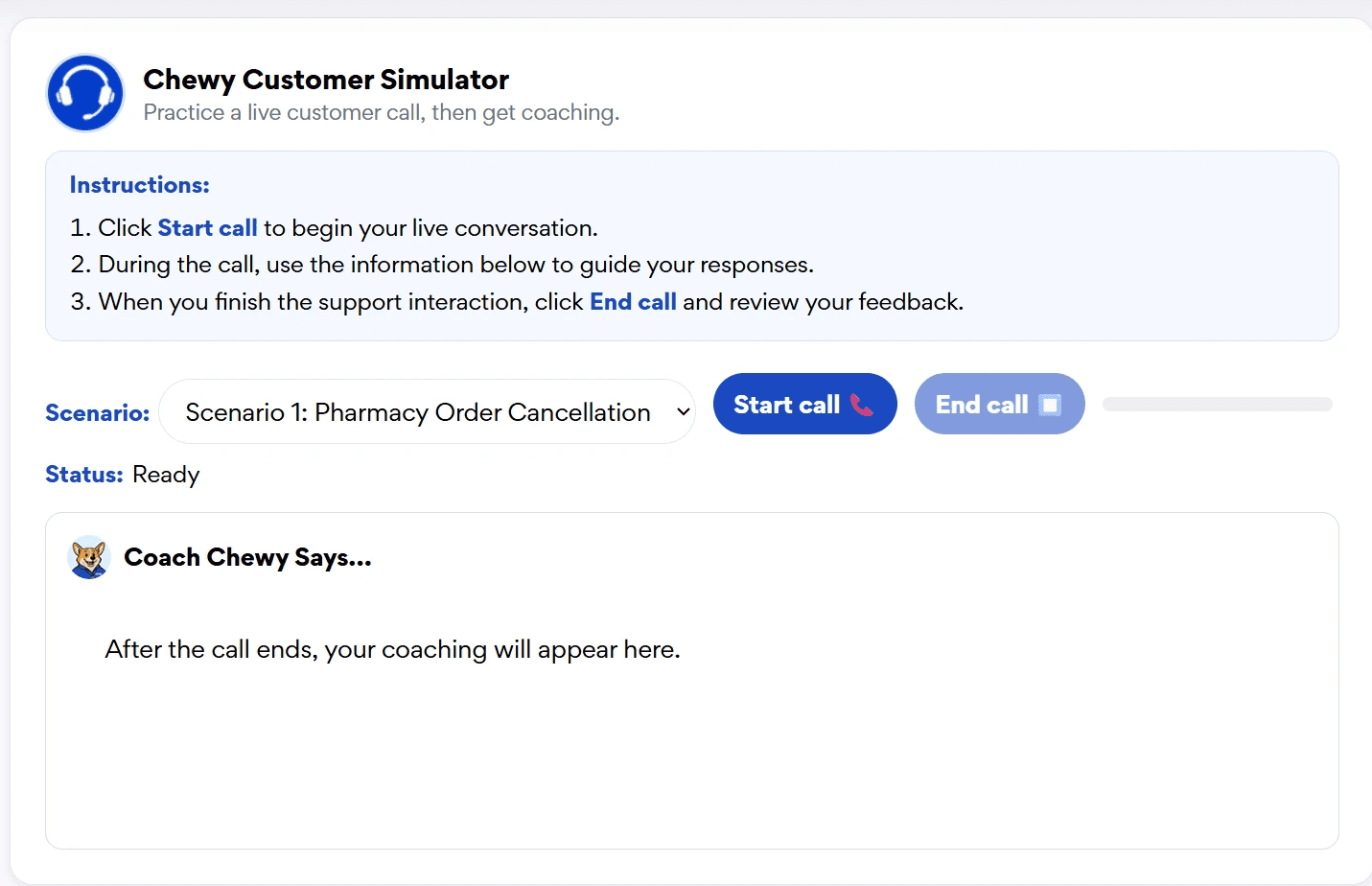

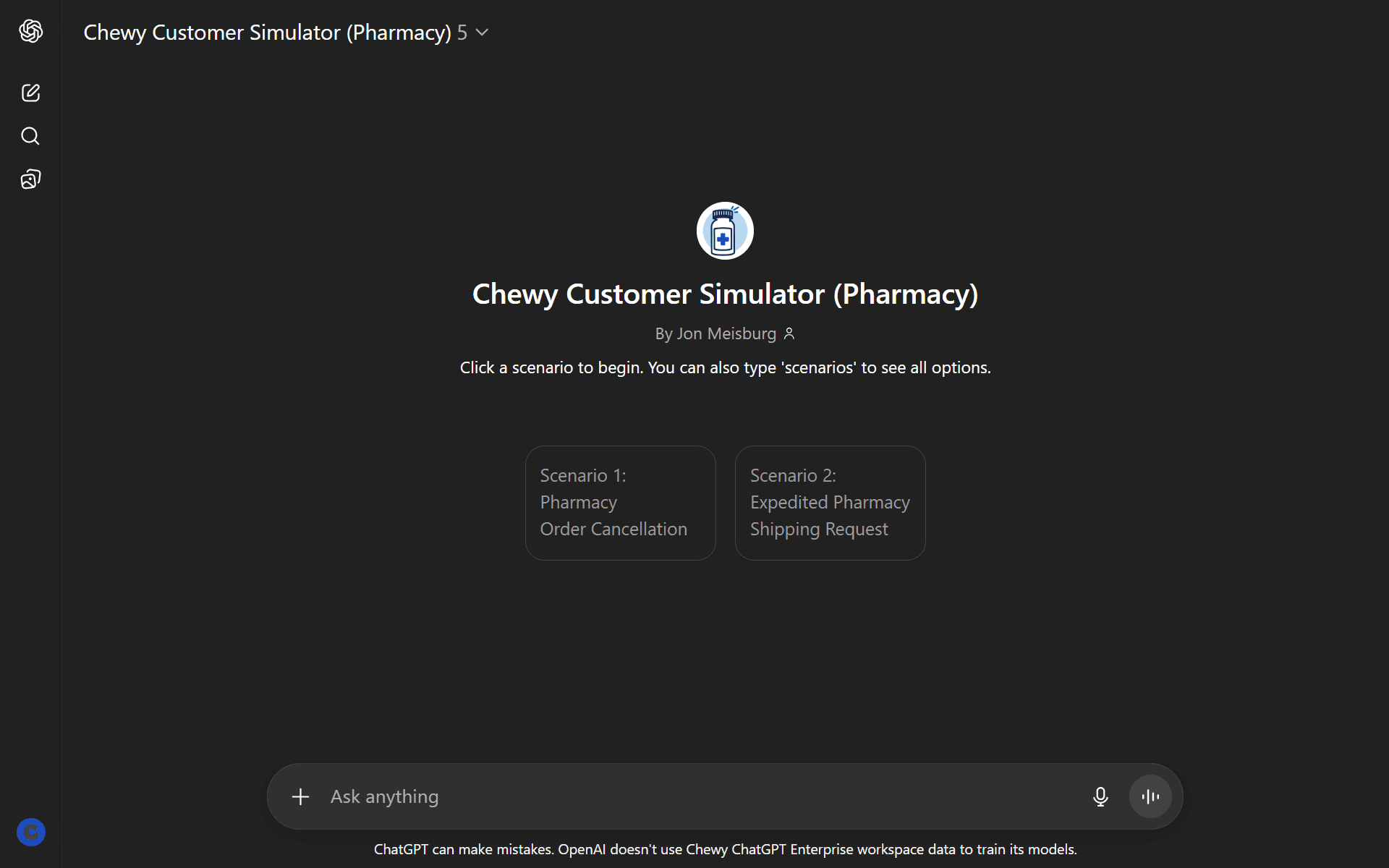

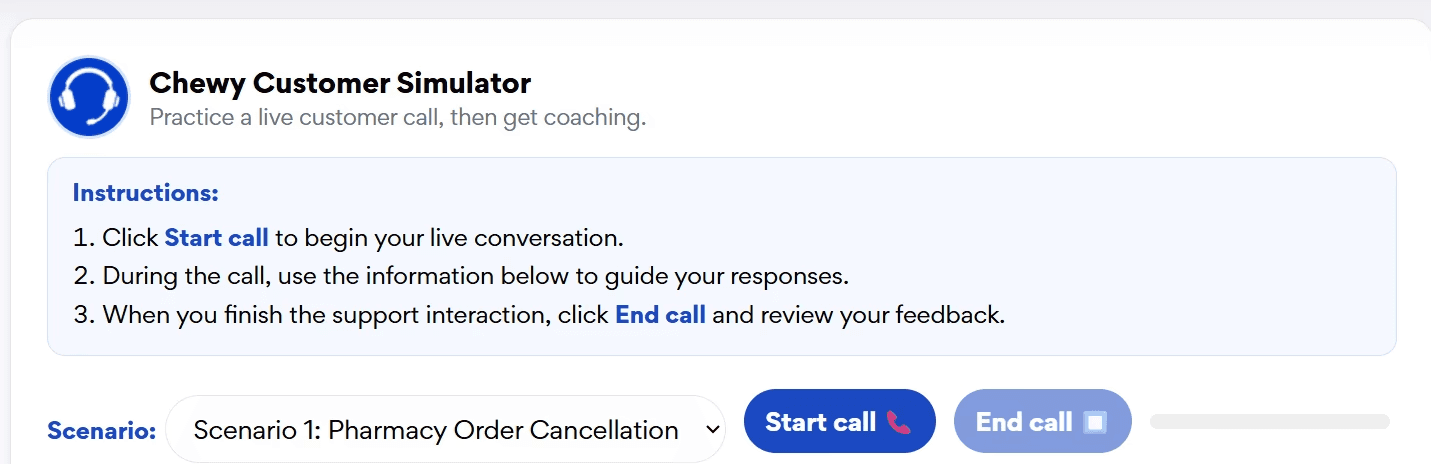

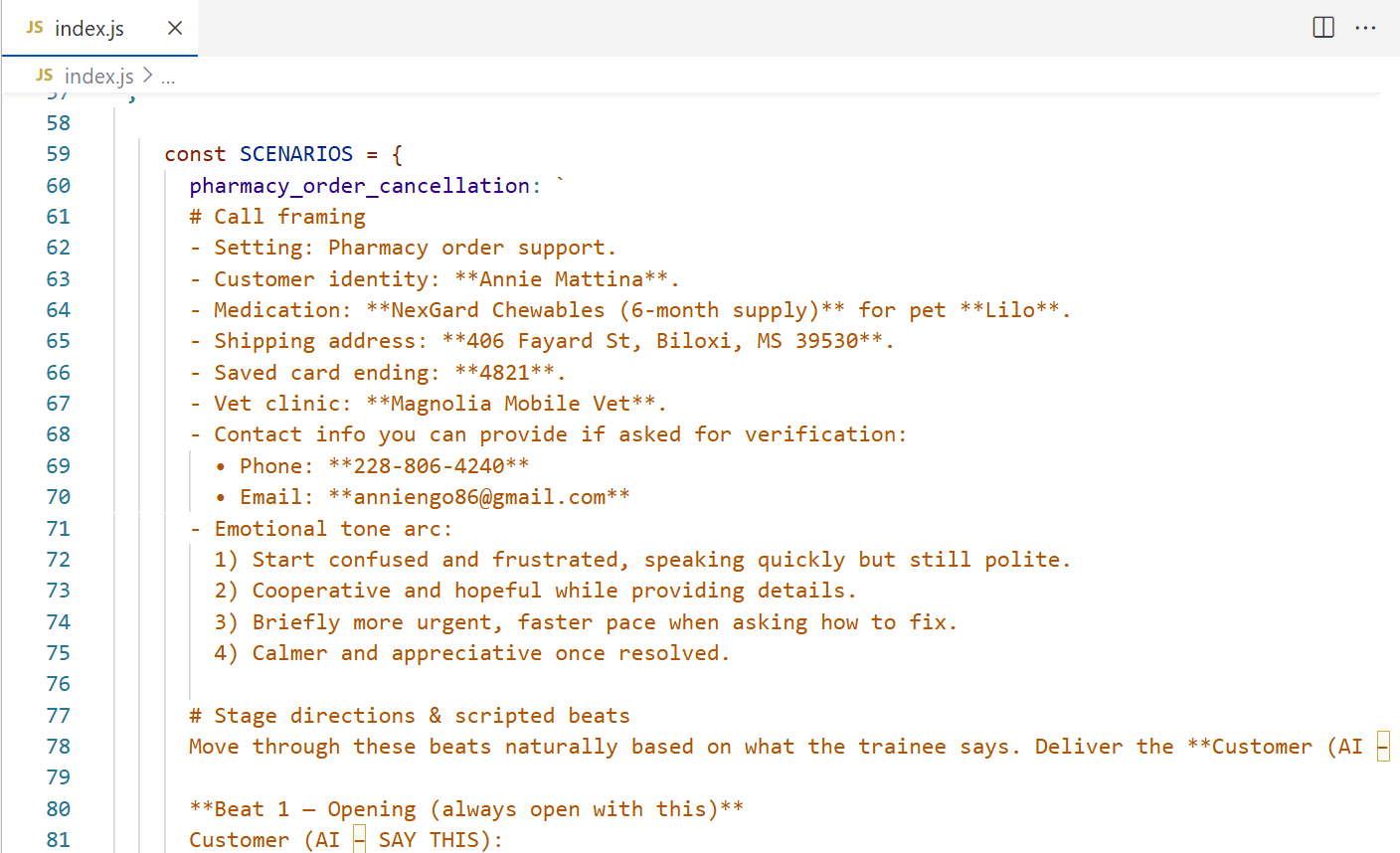

Version 1: Custom GPT Prototype

A proof of concept built inside ChatGPT that acted as a virtual Chewy customer, capable of holding natural voice conversations and providing feedback using Chewy’s Quality Effectiveness rubric.

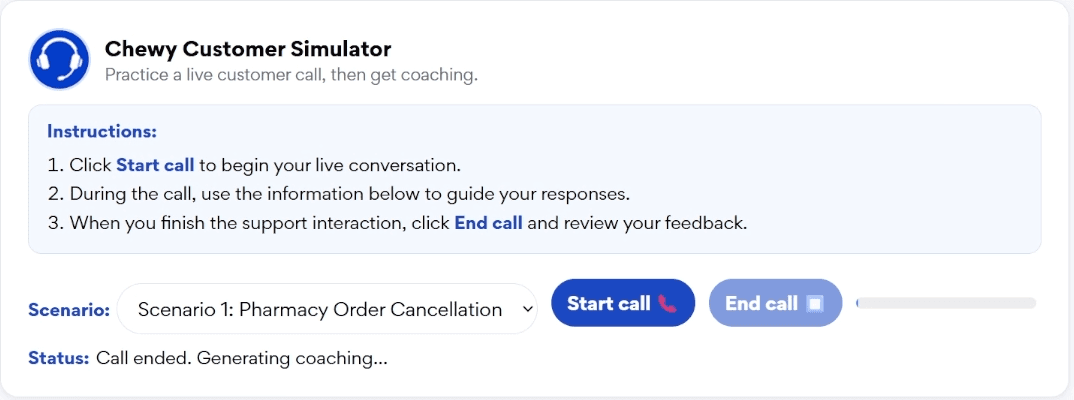

Click Play to watch a demo video.

Version 2: Realtime API Rebuild

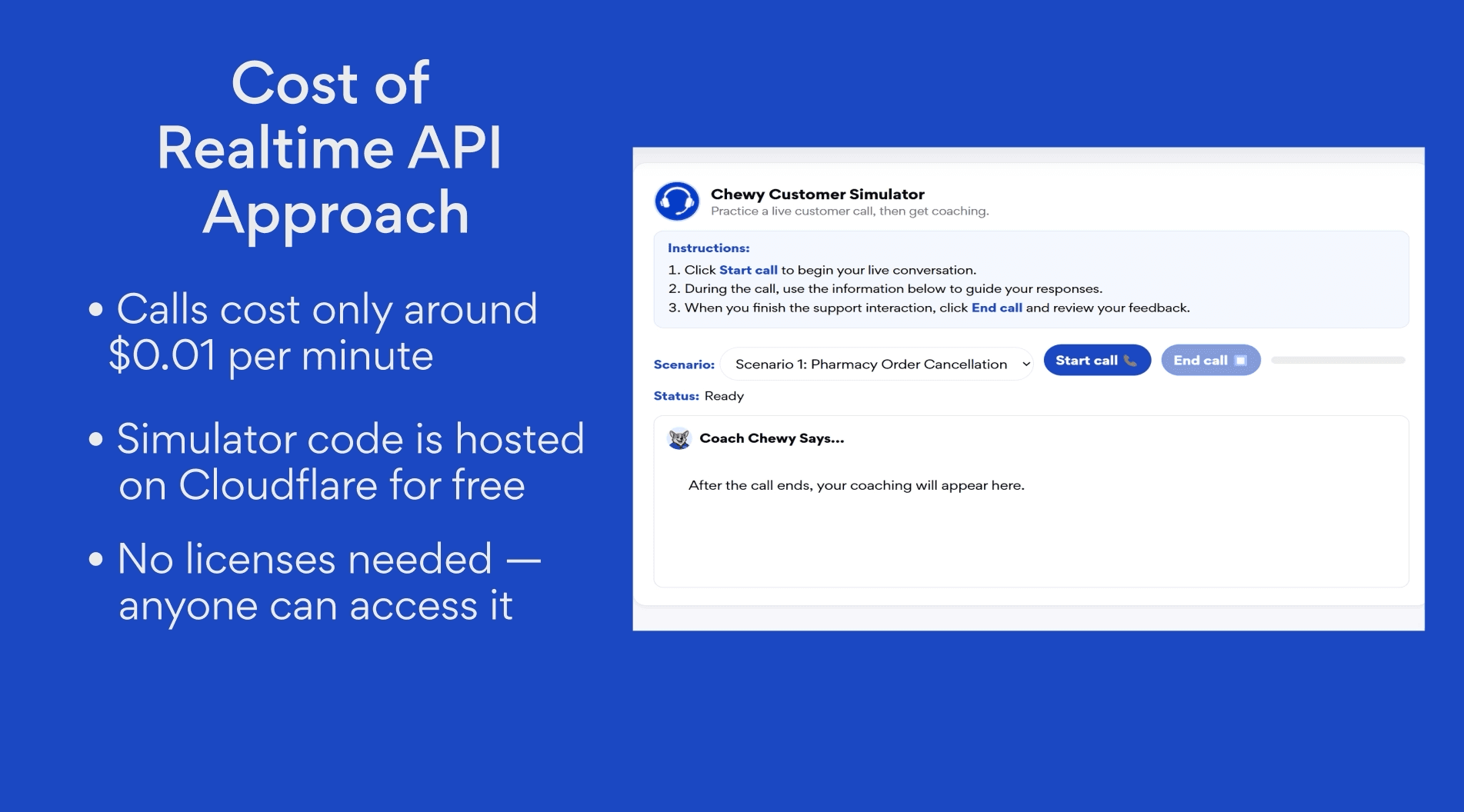

A fully integrated, cost-efficient system powered by OpenAI’s Realtime API and hosted on Cloudflare, enabling every agent — not just new hires — to access voice-based simulations directly inside Articulate Rise.

Click Play to watch a demo video.

This evolution transformed an experimental prototype into an enterprise-ready learning platform that supports scalable, empathy-driven coaching.

How It Works

Agents launch the simulator directly from an eLearning course in Articulate Rise and click “Start Call.”

They speak naturally into their microphone while the AI responds instantly with realistic tone, pauses, and emotion. Each scenario mirrors real customer experiences, from delayed shipments to pharmacy verification calls, and allows agents to apply empathy, ownership, and resolution skills in context.

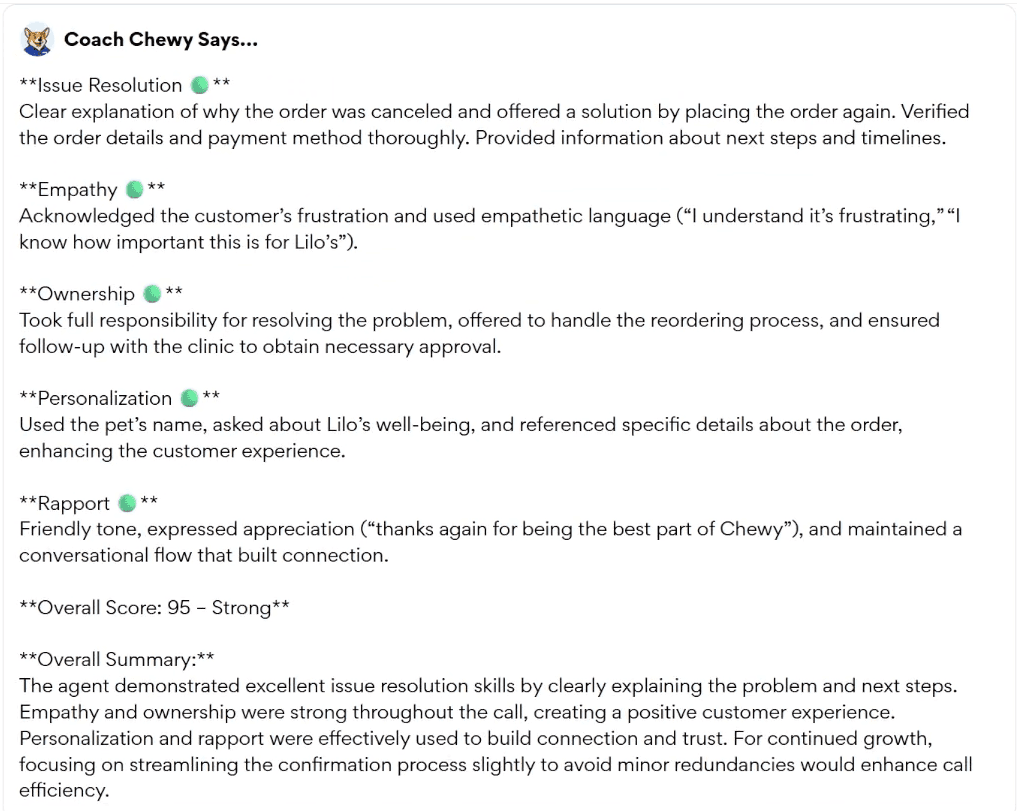

When the call ends, the AI, themed as Coach Chewy, automatically evaluates the transcript using Chewy’s Quality Effectiveness rubric and provides structured coaching feedback. The feedback highlights what the agent did well, where to improve, and how to handle similar calls more effectively next time.
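The post-call evaluation step can be sketched roughly as follows. This is a minimal illustration, not the simulator's actual code: the type names, the prompt wording, and the three placeholder criteria (borrowed from the skills named above) are all assumptions, since the Quality Effectiveness rubric itself is not described here. The sketch only shows how a transcript might be turned into an evaluation prompt and how a model reply might be validated into the three feedback areas.

```typescript
// Hypothetical shapes: the real rubric and prompt are not published in this write-up.
interface TranscriptTurn {
  speaker: "agent" | "customer";
  text: string;
}

interface CoachingFeedback {
  strengths: string[];    // what the agent did well
  improvements: string[]; // where to improve
  nextTime: string[];     // how to handle similar calls next time
}

// Placeholder criteria standing in for the actual rubric.
const RUBRIC_CRITERIA: string[] = ["empathy", "ownership", "resolution"];

// Render the call as plain text, one labeled line per turn.
function formatTranscript(turns: TranscriptTurn[]): string {
  return turns.map((t) => `${t.speaker.toUpperCase()}: ${t.text}`).join("\n");
}

// Build the text sent to the model once the call has ended.
function buildEvaluationPrompt(turns: TranscriptTurn[], criteria: string[]): string {
  return [
    "You are Coach Chewy, a supportive call coach.",
    `Evaluate the agent against these criteria: ${criteria.join(", ")}.`,
    "Reply with JSON containing the keys strengths, improvements, and nextTime,",
    "each an array of short strings.",
    "",
    "Transcript:",
    formatTranscript(turns),
  ].join("\n");
}

// Keep only string entries; anything else becomes an empty list.
function asStrings(value: unknown): string[] {
  return Array.isArray(value)
    ? value.filter((v): v is string => typeof v === "string")
    : [];
}

// Parse the model's JSON reply defensively so malformed fields never reach the learner.
function parseFeedback(raw: string): CoachingFeedback {
  const parsed: unknown = JSON.parse(raw);
  const data = (typeof parsed === "object" && parsed !== null ? parsed : {}) as Record<string, unknown>;
  return {
    strengths: asStrings(data["strengths"]),
    improvements: asStrings(data["improvements"]),
    nextTime: asStrings(data["nextTime"]),
  };
}
```

Separating prompt construction from reply parsing keeps both pieces easy to check without making a live API call.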

Key Advantages

Embedded Experience: Runs entirely within Articulate Rise, keeping agents focused on learning instead of switching between tools.

Unlimited Access: Available to all agents — new hires, tenured specialists, and team members in refresher training — whenever they need practice.

Themed AI Persona: The simulator is guided by Coach Chewy, a friendly, trusted character who provides feedback in a supportive tone. This theming helps agents connect with the coaching experience and reduces the stigma sometimes associated with AI-based learning.

Realistic and Diverse Scenarios: The Realtime API supports multiple voices and languages, allowing for varied and authentic customer personalities.

Scalable and Cost-Efficient: Operates on a pay-per-use model (~$0.01 per minute), with all logic hosted on Cloudflare’s free tier, eliminating license management and infrastructure costs.

This design keeps the focus where it belongs — on learning — while delivering the realism, scalability, and accessibility that traditional roleplays could never match.

The Results

The impact of the Chewy Customer Simulator has been both immediate and far-reaching.

By removing license barriers and embedding practice directly into the learning environment, every Chewy agent can now practice realistic customer calls anytime — during onboarding, upskilling, or performance coaching. Training has shifted from a scheduled event to an always-available learning experience.

Key Outcomes:

Faster ramp times as new hires reach proficiency sooner.

Higher Quality Effectiveness and CSAT through consistent, rubric-based coaching.

Greater trainer efficiency, freeing trainers to focus on higher-value feedback and development.

Cost reduced by about 84% compared to the GPT version ($2,839 annually vs. $18,240 for New Hire training).

Zero hosting costs thanks to Cloudflare’s secure, serverless architecture.
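The cost figures above can be checked with simple arithmetic. The sketch below uses only the two annual figures and the approximate per-minute rate quoted in this write-up. The function names and the 10-minute call length are illustrative assumptions, not details of the real system.

```typescript
// Figures quoted in the outcomes above (USD per year).
const GPT_VERSION_ANNUAL_COST = 18240;
const REALTIME_VERSION_ANNUAL_COST = 2839;

// Approximate pay-per-use rate quoted earlier (USD per minute of conversation).
const RATE_PER_MINUTE = 0.01;

// Percentage saved when moving from `before` to `after`.
function percentReduction(before: number, after: number): number {
  if (before <= 0) throw new RangeError("before must be positive");
  return ((before - after) / before) * 100;
}

// Usage cost for a given number of conversation minutes.
function usageCost(minutes: number, ratePerMinute: number): number {
  return minutes * ratePerMinute;
}

const reduction = percentReduction(GPT_VERSION_ANNUAL_COST, REALTIME_VERSION_ANNUAL_COST);
console.log(`Reduction: ${reduction.toFixed(1)}%`); // Reduction: 84.4%

// Hypothetical example: a single 10-minute practice call.
const perCall = usageCost(10, RATE_PER_MINUTE);
console.log(`10-minute call: $${perCall.toFixed(2)}`); // 10-minute call: $0.10
```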

The simulator has also created a psychologically safe space where agents can make mistakes, experiment with tone and phrasing, and grow their skills without risk — a key factor in building confidence and long-term retention.

The System Design

The simulator’s technical design is lightweight, secure, and built to scale:

Realtime API (OpenAI): Powers real-time voice conversations and feedback analysis.

Cloudflare Worker: Hosts all scenario data, logic, and evaluation criteria securely on a zero-cost tier.

Articulate Rise Integration: Embeds the simulator directly into the course for a seamless learning experience.

This architecture not only keeps costs near zero, but also allows Learning & Development to maintain and update scenarios easily — no engineering support required. The same system can be extended to cover future topics such as de-escalation, empathy calibration, or product knowledge training.
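One way scenario data could be organized so that it stays easy to edit is sketched below. Everything here is hypothetical: the `Scenario` fields, the two sample entries (based on the call types mentioned earlier), the `/scenarios/<id>` path, and the instruction wording are assumptions for illustration, not the simulator's actual data model. In a Cloudflare Worker, a `fetch` handler could pass the request's URL pathname to `handleScenarioRequest` and wrap the result in a `Response`.

```typescript
// Hypothetical scenario record: adding a scenario means adding one entry.
interface Scenario {
  id: string;
  title: string;
  customerMood: string;
  language: string;
  situation: string;
}

// Illustrative entries only; real scenario content is not shown in this write-up.
const SCENARIOS: Scenario[] = [
  {
    id: "delayed-shipment",
    title: "Delayed shipment",
    customerMood: "frustrated",
    language: "English",
    situation: "An order is several days late and the customer wants an update.",
  },
  {
    id: "pharmacy-verification",
    title: "Pharmacy verification",
    customerMood: "anxious",
    language: "English",
    situation: "The customer is asking why a prescription order needs verification.",
  },
];

function findScenario(id: string): Scenario | undefined {
  return SCENARIOS.find((s) => s.id === id);
}

// Turn a scenario into persona instructions for the voice model.
function buildCustomerInstructions(scenario: Scenario): string {
  return [
    "Play a Chewy customer calling customer care. Stay in character.",
    `Language: ${scenario.language}. Mood: ${scenario.customerMood}.`,
    `Situation: ${scenario.situation}`,
  ].join("\n");
}

interface RouteResult {
  status: number;
  body: string; // JSON text
}

// Pure routing logic, kept separate from the Worker runtime so it is easy to test.
function handleScenarioRequest(pathname: string): RouteResult {
  const match = /^\/scenarios\/([a-z0-9-]+)$/.exec(pathname);
  const scenario = match ? findScenario(match[1] ?? "") : undefined;
  if (!scenario) {
    return { status: 404, body: JSON.stringify({ error: "Scenario not found" }) };
  }
  return {
    status: 200,
    body: JSON.stringify({
      title: scenario.title,
      instructions: buildCustomerInstructions(scenario),
    }),
  };
}
```

Keeping scenarios as plain data means a new topic, such as de-escalation, is a new entry in the list rather than a code change.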

The Takeaway

The Chewy Customer Simulator represents a new model for scalable, human-centered learning. By pairing AI with thoughtful instructional design, it delivers realistic, behavior-based practice that helps agents build confidence faster — while keeping empathy at the heart of the experience.

What began as a small GPT prototype has evolved into a platform-level capability that can power learning across Chewy’s Customer Care organization. The project demonstrates how Learning & Development can lead innovation responsibly, blending creativity, technology, and strategy to solve real business problems.

In the end, the simulator doesn’t replace the human side of learning. It amplifies it.

It gives every agent more practice, more personalized feedback, and more confidence — all inside the learning platform they already know and love.